
| FEATURED |
|---|
| A Discovery Report on Learning in USAID/Washington: This PPL/LER compendium of learning approaches that are working in USAID was developed based on 80 in-depth, semi-structured interviews with USAID employees from 49 separate offices across 10 bureaus. |
| ALSO SEE |
| Evaluation Feedback for Effective Learning and Accountability |

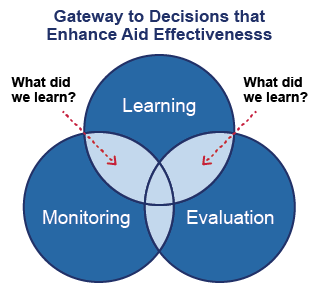

USAID views collaborating, learning, and adapting as important drivers throughout the Program Cycle. Designs for new programs and projects build on evidence from evaluations of earlier work, and aid effectiveness is enhanced over the life of a program or project based on feedback from performance and assumption monitoring, as well as from evaluations undertaken during implementation.

This section of the E3 MEL Toolkit links monitoring and evaluation to learning on a real-time basis and focuses on steps that Missions and other operating units can take to ensure that MEL results are used to enhance partner relationships, support decision-making and foster development effectiveness. The section includes four subsections, as listed below. You can skip directly to any subsection that interests you, or work through the section sequentially by using the "next" command at the bottom of each page. Practical ideas for maximizing Agency and partner learning from monitoring and evaluation are highlighted in the USAID and OECD reports shown in the sidebar on this page. Optional templates that support USAID's post-evaluation review and action process are also provided in this section.

- Reflect and Act on Monitoring Feedback

- Hold Post-Evaluation Action Reviews

- Documenting Evaluation Use

- Meta-Analyses, Evaluation Summits & Systematic Reviews

Learning from monitoring and evaluation isn't automatic. USAID field staff are expected to forward a variety of reports to USAID/Washington each year, and annual performance reports and evaluation reports are part of this flow. Yet when, as one USAID Deputy Director once put it, a Mission is simply a railroad station through which reporting flows to USAID's Washington headquarters, learning is lost, even though expectations about accountability may be well served. Bringing learning and accountability into better balance is the clear intent of USAID's Program Cycle Guidance and the suite of policy reforms that support it, including USAID's CDCS Guidance, its Project Design Guidance, its Evaluation Policy, revisions to ADS 201, and a host of planned Technical Notes and How-To Notes from PPL/LER.

Integrating Monitoring, Evaluation and Learning occurs primarily at three key points in these processes: (a) during project design when lessons from prior evaluations are assembled to guide program and project development, (b) when utilization-focused plans for performance monitoring and evaluation are developed, and (c) when performance data and evaluation reports become available and their findings and lessons can be absorbed and applied. This section of the kit focuses on the last of these points.

Maximizing the value from monitoring data and evaluation reports as they are received starts with asking "what did we learn?" Often what MEL data and reports have to teach us is mixed in with facts with which some, but not necessarily all, potential users are already familiar. Extracting or highlighting new information and key findings and recommendations is often an important first step towards ensuring that what MEL products have to tell busy managers in USAID, in country partner organizations and on Implementing Partner teams is absorbed and used. The remainder of this section illustrates a much wider range of tools for transforming MEL results into learning and better decisions.



A toolkit developed and implemented by: For more information, please contact Paul Fekete. |
